A few weeks ago I was on a call with an SRE at a major eCommerce company. They've been running a six-month proof-of-concept with one of the big AI-powered root cause analysis platforms.

The vendor keeps showing them amazing success stories, and they have seen it help with some incidents. But for every time it points them in the right direction, there are multiple cases where it sends their on-call engineers down completely wrong paths, burning valuable time during critical incidents before they give up and start over with manual investigation.

They paused and told me the worst part: they still can't decide if it's actually worth deploying. Six months of testing and they're no closer to a decision.

This conversation happens all the time. Infrastructure teams are drowning in AI vendor pitches and internal pressure to "do something with AI," but they're missing a framework to evaluate which tools will actually work in their environment.

The problem isn't about finding more accurate AI models. It's about confidence.

Why Infrastructure AI Adoption Fails

Most AI initiatives fail in infrastructure environments for predictable reasons that have nothing to do with model performance:

The Demo vs Reality Gap: AI tools that work perfectly in controlled vendor demos fall apart when they encounter the complexity of real production systems.

Your infrastructure has hundreds of different services talking to each other in ways that weren't documented, legacy systems that can't be replaced, and dependencies that span multiple cloud accounts.

The Accuracy Trap: Teams get obsessed with finding AI that's "accurate enough" while ignoring how they position the AI in their workflow.

A 99% accurate AI making autonomous production changes still gets roughly 1 change in 100 wrong - and each of those can be catastrophic when it hits live systems.

An 85% accurate AI helping humans review changes costs almost nothing when it's wrong (you ignore bad suggestions) but still delivers value when it's right.

The positioning matters more than the raw accuracy numbers.
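Here's a rough back-of-the-envelope sketch of that asymmetry. The dollar figures and the `expected_error_cost` helper are invented purely for illustration; plug in your own numbers.

```python
def expected_error_cost(actions: int, accuracy_pct: int, cost_per_error: int) -> float:
    """Expected total cost of the AI's mistakes over a number of actions."""
    wrong = actions * (100 - accuracy_pct) / 100
    return wrong * cost_per_error


# Hypothetical costs in dollars, chosen only to illustrate the asymmetry.
OUTAGE_COST = 50_000   # an autonomous bad change reaches production
DISMISSAL_COST = 5     # a reviewer spends a moment rejecting a bad suggestion

autonomous = expected_error_cost(100, 99, OUTAGE_COST)
assisted = expected_error_cost(100, 85, DISMISSAL_COST)

print(autonomous)  # 50000.0
print(assisted)    # 75.0
```

Under these assumed costs, the less accurate but human-reviewed setup is far cheaper when it's wrong, because the cost per mistake dominates the error rate.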

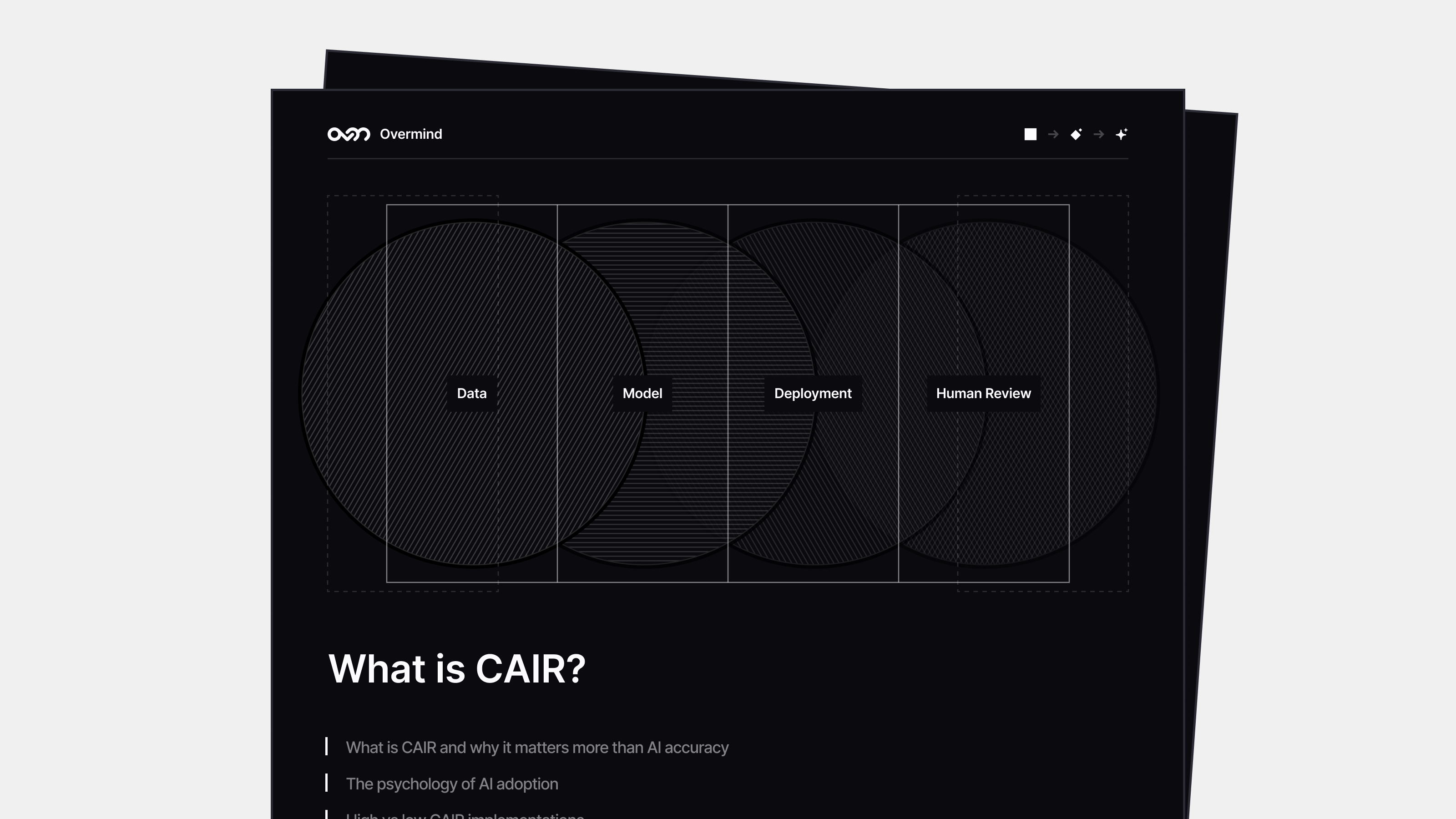

Introducing CAIR: The Framework Your Infrastructure Team Needs

There's a framework that can predict whether AI tools are worth investing in for infrastructure teams. It's called CAIR - Confidence in AI Results, developed by the team at LangChain to predict the success of AI products.

While originally created for general AI product development, CAIR turns out to be an excellent predictor of whether AI will actually deliver value within DevOps workflows.

CAIR measures user confidence through a simple relationship:

CAIR = Value of Success ÷ (Risk of Failure × Effort to Fix)

This framework shifts focus from pure technical metrics to the confidence drivers that actually determine adoption, and therefore value.
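As a minimal sketch, the relationship can be written as a tiny function. The function name `cair` and the use of plain numbers as inputs are assumptions for illustration; the formula itself only defines a ratio.

```python
def cair(value: float, risk: float, effort: float) -> float:
    """Confidence in AI Results: value divided by (risk x effort)."""
    if risk <= 0 or effort <= 0:
        raise ValueError("risk and effort must be positive")
    return value / (risk * effort)


# Same value, very different confidence depending on the denominator.
print(cair(value=4, risk=1, effort=1))  # 4.0
print(cair(value=4, risk=4, effort=4))  # 0.25
```

Because risk and effort multiply, lowering either one raises the score even when the value stays the same.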

CAIR in Action: Infrastructure Examples

Let me show you how this plays out across different infrastructure use cases:

Very High CAIR: Code Generation for Infrastructure

Example: Using AI to generate Terraform configurations or CloudFormation templates with human review.

- Value: High (saves hours on boilerplate code)

- Risk of Failure: Low (generated locally, version controlled, reviewed before deployment)

- Effort to Fix: Low (edit the generated code)

CAIR = High ÷ (Low × Low) = Very High

This works because you get massive time savings in a completely safe environment.

Even if the AI generates terrible code, fixing it is trivial and there's no production impact.

Low CAIR: Autonomous Infrastructure Changes

Example: AI systems that automatically scale resources, modify security groups, or deploy changes without approval.

- Value: High (operational efficiency, reduced toil)

- Risk of Failure: High (outages, security vulnerabilities, cascading failures)

- Effort to Fix: High (complex rollback procedures, incident response, customer impact)

CAIR = High ÷ (High × High) = Low

Even small mistakes have massive consequences. This is why most "autonomous infrastructure" initiatives fail - the outcomes simply don't justify the risks.

Five Strategies for Building Confidence in Infrastructure AI

The original CAIR blog post suggests five strategies for integrating AI in a way that raises the CAIR score, and therefore its value to your platform:

1. Strategic Human Checkpoints

Rather than full automation, place human decision points where they add the most value.

Use approval workflows for production changes, human review of security modifications, and oversight for cross-service dependencies.

AI suggests infrastructure optimizations, but humans approve each change before execution. Preventing AI from making irreversible mistakes dramatically reduces Risk of Failure.
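One way to picture a checkpoint is a thin gate in front of whatever executes the change. This is only a sketch: `ProposedChange`, `apply_with_checkpoint`, and the `approve`/`execute` callbacks are hypothetical names, standing in for your real approval workflow and deployment tooling.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedChange:
    description: str
    environment: str  # e.g. "staging" or "production"


def apply_with_checkpoint(
    change: ProposedChange,
    approve: Callable[[ProposedChange], bool],
    execute: Callable[[ProposedChange], None],
) -> bool:
    """Run `execute` only if a human approver signs off on production changes."""
    needs_approval = change.environment == "production"
    if needs_approval and not approve(change):
        return False  # rejected: nothing is executed
    execute(change)
    return True


executed = []
change = ProposedChange("resize node pool", "production")
# A reviewer who rejects the change: nothing reaches `execute`.
print(apply_with_checkpoint(change, approve=lambda c: False, execute=executed.append))
```

In this sketch, non-production changes skip the approval step; whether that's acceptable depends on how you isolate your environments.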

2. Leverage Your Rollback Expertise

Infrastructure teams already excel at making changes reversible through IaC versioning, blue-green deployments, and automated rollback procedures.

Apply this strength so that AI-generated changes flow through your existing workflows and can be reverted instantly. When AI makes a mistake, you roll back immediately instead of troubleshooting manually, minimizing Effort to Fix.
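A minimal sketch of the idea, assuming you can express a change as three callables: one that applies it, one that reverts it, and one health check. The `apply_with_rollback` helper is hypothetical; in practice these would wrap your IaC tooling or deployment pipeline.

```python
from typing import Callable


def apply_with_rollback(
    apply: Callable[[], None],
    rollback: Callable[[], None],
    healthy: Callable[[], bool],
) -> bool:
    """Apply a change, then revert it if it raises or the health check fails."""
    try:
        apply()
        ok = healthy()
    except Exception:
        ok = False
    if not ok:
        rollback()
    return ok


# Toy example: a version history where the new version fails its health check.
versions = ["v1"]
result = apply_with_rollback(
    apply=lambda: versions.append("v2"),
    rollback=lambda: versions.pop(),
    healthy=lambda: False,
)
print(result, versions)  # False ['v1']
```

The point is that the revert path is decided before the AI-generated change runs, not improvised during an incident.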

3. Build Safe Testing Environments

Isolate AI experimentation from production through staging environments, account isolation strategies, and canary deployment patterns.

AI tools should operate on staging replicas before touching anything real. Because AI mistakes made in staging can't reach production systems, Risk of Failure drops to near zero for that stage.

4. Maintain Operational Visibility

Infrastructure teams need audit trails and clear reasoning for troubleshooting and compliance. AI recommendations must include detailed explanations: "Suggesting this security group change because traffic analysis shows port 8080 hasn't been used in 90 days."

When you can assess if AI reasoning makes sense and know exactly what to fix when AI is wrong, you reduce both Risk of Failure and Effort to Fix.
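As a sketch of what "include the reasoning" could look like in practice, here is a small record type that refuses to log a recommendation without an explanation. The `Recommendation` fields and `to_audit_line` helper are assumptions for illustration, not any particular tool's schema.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class Recommendation:
    action: str
    reasoning: str
    evidence: dict
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(rec: Recommendation) -> str:
    """Serialize a recommendation as one JSON line; reject empty reasoning."""
    if not rec.reasoning.strip():
        raise ValueError("recommendation must explain its reasoning")
    return json.dumps(asdict(rec), sort_keys=True)


rec = Recommendation(
    action="close port 8080 in security group",
    reasoning="traffic analysis shows port 8080 hasn't been used in 90 days",
    evidence={"port": 8080, "idle_days": 90},
)
print(to_audit_line(rec))
```

One JSON line per recommendation keeps the trail easy to ship to whatever log store you already use for compliance.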

5. Progressive Trust Building

Start with read-only insights, progress to suggested changes, and eventually enable approved automation for routine tasks. Gradually building confidence as you see AI perform well in safer contexts maximizes Value while keeping Risk of Failure low.
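The three stages can be sketched as an ordered set of trust levels, with each action declaring the minimum level it needs. The action names and the `is_allowed` helper below are hypothetical examples, not a prescribed policy.

```python
from enum import IntEnum


class TrustLevel(IntEnum):
    READ_ONLY = 1            # AI surfaces insights only
    SUGGEST = 2              # AI proposes changes for humans to apply
    APPROVED_AUTOMATION = 3  # AI applies routine changes after approval


# Minimum trust level each (hypothetical) action requires.
REQUIRED_LEVEL = {
    "summarize_alerts": TrustLevel.READ_ONLY,
    "propose_scaling_change": TrustLevel.SUGGEST,
    "rotate_logs": TrustLevel.APPROVED_AUTOMATION,
}


def is_allowed(action: str, current: TrustLevel) -> bool:
    """Unknown actions are denied by default."""
    required = REQUIRED_LEVEL.get(action)
    return required is not None and current >= required


print(is_allowed("summarize_alerts", TrustLevel.READ_ONLY))  # True
print(is_allowed("rotate_logs", TrustLevel.SUGGEST))         # False
```

Raising the team's current level then becomes an explicit decision you make after the AI has earned it at the level below.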

The CAIR-First Approach to AI Evaluation

Stop evaluating AI tools based on demos and feature lists. Before you evaluate any AI tool for infrastructure, ask:

- What happens when this AI makes a mistake? (Risk of Failure assessment)

- How much effort would it take to fix that mistake? (Effort to Fix complexity)

- How much value does this create when it works correctly? (Value calculation)

- What's our CAIR score for this use case?

Using the CAIR Scorecard for Infrastructure Teams

To systematically evaluate AI opportunities, use a simple 1-5 scale for each component:

Value of Success (1-5 scale)

- 1 - Minimal: Small time savings, minor improvements

- 2 - Low: Moderate efficiency gains, some cost reduction

- 3 - Medium: Significant productivity boost, clear ROI

- 4 - High: Major time/cost savings, competitive advantage

- 5 - Very High: Transformational impact, game-changing results

Risk of Failure (1-5 scale)

- 1 - Very Low: Safe sandbox, easy to contain mistakes

- 2 - Low: Limited production impact, good safety nets

- 3 - Medium: Some production risk, manageable consequences

- 4 - High: Significant business impact if wrong

- 5 - Very High: Critical system risk, major outage potential

Effort to Fix (1-5 scale)

- 1 - Very Low: Simple undo, instant rollback

- 2 - Low: Quick manual fix, clear correction path

- 3 - Medium: Some detective work, moderate effort to fix

- 4 - High: Complex troubleshooting, significant manual work

- 5 - Very High: Extensive investigation, cascading fixes needed

CAIR Priority Levels

- High Priority (CAIR > 3): Invest immediately

- Medium Priority (CAIR 1-3): Pilot carefully with safety measures

- Low Priority (CAIR < 1): Avoid or delay until you can improve the score
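The scorecard and priority levels above can be sketched in a few lines. The function names are assumptions, and the three example use cases are made-up scores just to show each band.

```python
def cair_score(value: int, risk: int, effort: int) -> float:
    """CAIR from three 1-5 scorecard ratings."""
    for name, score in (("value", value), ("risk", risk), ("effort", effort)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {score}")
    return value / (risk * effort)


def priority(score: float) -> str:
    """Map a CAIR score to the priority levels: >3, 1-3, <1."""
    if score > 3:
        return "High"
    if score >= 1:
        return "Medium"
    return "Low"


# Illustrative ratings only: (value, risk, effort)
for ratings in [(4, 1, 1), (3, 1, 2), (4, 4, 4)]:
    score = cair_score(*ratings)
    print(ratings, score, priority(score))
```

With 1-5 inputs the score ranges from 0.04 (1 ÷ 25) to 5 (5 ÷ 1), so only use cases with very low risk and effort can clear the "invest immediately" bar.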

Infrastructure-Specific Scoring Considerations

- System interdependencies: Changes ripple across services in unpredictable ways

- Compliance requirements: Some changes require approval workflows and audit trails

- Team skill levels: Consider the experience level of people who will use the tool

- Blast radius: How many customers/services could be affected by a mistake?

- Recovery time: How long would it take to fully recover from an AI error?

Stop Flying Blind on AI Decisions

Remember that SRE who spent six months evaluating an AI root cause analysis tool without getting any closer to a decision?

They were asking the wrong questions. Instead of "Is this AI accurate enough?" they should have been asking "What's our CAIR score for this use case?"

Let's apply the framework to their situation:

- Value: High (faster incident resolution saves hours of engineer time)

- Risk of Failure: High (wrong diagnosis extends outage duration by 45+ minutes, amplifying customer impact and revenue loss)

- Effort to Fix: Medium (engineers can abandon the AI's suggestions and start a manual investigation, but the time lost during a critical incident can't be recovered)

CAIR = High ÷ (High × Medium) = Low to Moderate

This low-to-moderate CAIR score explains their hesitation perfectly. During incidents, the cost of being wrong isn't just inefficiency - it's extended downtime when every minute counts. The tool might save time when it's right, but the psychological barrier of potentially making outages worse creates serious adoption friction.
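To put a rough number on it, here is the same calculation using the 1-5 scorecard. Translating the qualitative ratings into High = 4 and Medium = 3 is my own mapping from the scorecard labels, so treat the result as a sketch rather than a measured score.

```python
# Scorecard labels for Risk of Failure and Effort to Fix (Value uses "Minimal" for 1).
SCALE = {"Very Low": 1, "Low": 2, "Medium": 3, "High": 4, "Very High": 5}

value = SCALE["High"]     # faster incident resolution
risk = SCALE["High"]      # a wrong diagnosis extends the outage
effort = SCALE["Medium"]  # engineers can fall back to manual investigation

rca_cair = value / (risk * effort)
print(round(rca_cair, 2))  # 0.33
```

Under that mapping the score sits below the CAIR < 1 cutoff, which lines up with the hesitation the team felt.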

That SRE's six-month evaluation struggle could have been avoided with a simple CAIR calculation. The framework gives you clarity on why certain AI tools feel risky and others don't.

Next time you're evaluating an AI tool for infrastructure, use the 1-5 scoring method from this post: rate the value when it works, the risk when it fails, and the effort to fix its mistakes. The CAIR score will tell you whether it's worth your time.